This Trending Face App Reveals How AI Has A Race Problem

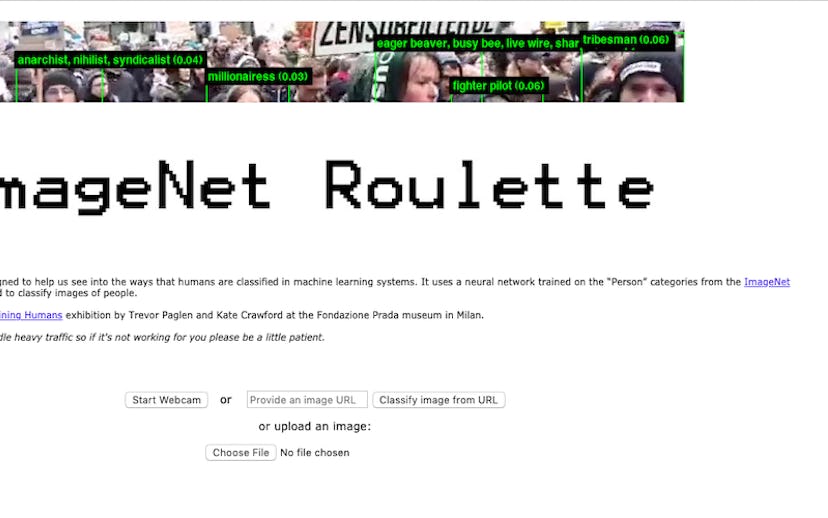

ImageNet Roulette shows how computers are racist, too

The latest selfie-based app to sweep the internet is showing how AI can be overtly racist when it is trained on bad data. ImageNet Roulette asks users to upload a photo of their face and, in turn, returns a label for what the AI supposedly sees in the picture. For some, it feels like harmless fun, giving hilariously strange and rude answers. For others, specifically people of color, it often churns out slurs and racist descriptions.

For example, upload a photo of Kacey Musgraves and the app returns her face outlined by a green box labeled "enchantress, witch."

Below the image, ImageNet Roulette breaks down the hierarchy of terms the AI followed to land on this particular label. Starting from something as basic as "person, individual, someone, somebody, mortal, soul," it moved to "occultist" and then to "enchantress, witch."

Per a description listed on the site, ImageNet "contains a number of problematic, offensive and bizarre categories," all drawn from WordNet, a Princeton database of word classifications dating back to the '80s. ImageNet's photos are labeled with WordNet's categories, and the app's algorithm was trained to match new photos to those tags. The description continues, "Some use misogynistic or racist terminology. Hence, the results ImageNet Roulette returns will also draw upon those categories. That is by design: we want to shed light on what happens when technical systems are trained on problematic training data. AI classifications of people are rarely made visible to the people being classified."

But when the app went viral, that context did not travel with it. Anyone could have read the fine print, but most people just wanted to get in on the fun of being read by their own selfies. Some of the reads were admittedly brutally funny: for me, old selfies produced the label "an unmarried girl (especially a virgin)." But other tags, as became apparent on Twitter, were outright offensive. For example, Julia Carrie Wong, a reporter for The Guardian, was assigned racial slurs.

"Feeling kind of sad and unsettled because a robot was racist to you is a weird and stupid feeling and I hate it," she wrote in her replies, warning that "it's not necessarily going to be haha funny if you are a POC and put your pic in."

BuzzFeed News notes that this sort of bad training data in AI has real-world implications. "WordNet is used in machine translation and crossword puzzle apps. And ImageNet is currently being used by at least one academic research project," per BuzzFeed.

Kate Crawford, AI researcher and one half of the duo that created ImageNet Roulette, explained her reasoning for creating the app in the first place in a series of tweets earlier this week. "ImageNet is one of the most significant training sets in the history of AI," Crawford wrote, reiterating how WordNet and ImageNet work on a basic level. ImageNet Roulette "reveals the deep problems with classifying humans—be it race, gender, emotions or characteristics. It's politics all the way down, and there's no simple way to 'debias' it," she concluded.

After the app went viral, or "nuts" as Crawford described it, she followed up, "Good to see this simple interface generate an international critical discussion about the race & gender politics of classification in AI, and how training data can harm." She then linked back to Wong's reportage on the app.